February 11, 2026

Many employees turn to AI tools because they make work easier. When they do so without informing IT or leadership, however, Shadow AI quietly spreads within the organization. The practice often begins with good intentions but quickly leads to serious problems such as data leaks, unexpected expenses, and compliance violations.

Shadow AI starts small: someone tries a free chatbot and shares it with coworkers. Soon it spreads across teams, and as a result, sensitive company data ends up on outside servers.

Let’s explore the concept in detail, looking at exactly what it involves and the practical steps, such as putting cybersecurity software in place, that any business can take to address it effectively.

Imagine a marketing manager who needs fresh ideas for blog posts, so she opens a web-based AI writing tool, enters company-specific details, and receives instant drafts without anyone in IT ever knowing about it.

This simple scenario captures the essence of Shadow AI, which occurs whenever employees adopt AI applications without obtaining approval from the company’s IT or security teams. These tools, ranging from chat services and image generators to data analysis platforms, typically operate on external servers where workers upload sensitive information such as customer lists, sales reports, or internal documents.

The term Shadow AI comes from the earlier concept of shadow IT, in which staff used unapproved software. Today’s version spreads faster, though, because new AI tools launch daily, sign-ups take only seconds, and no formal checks are required.

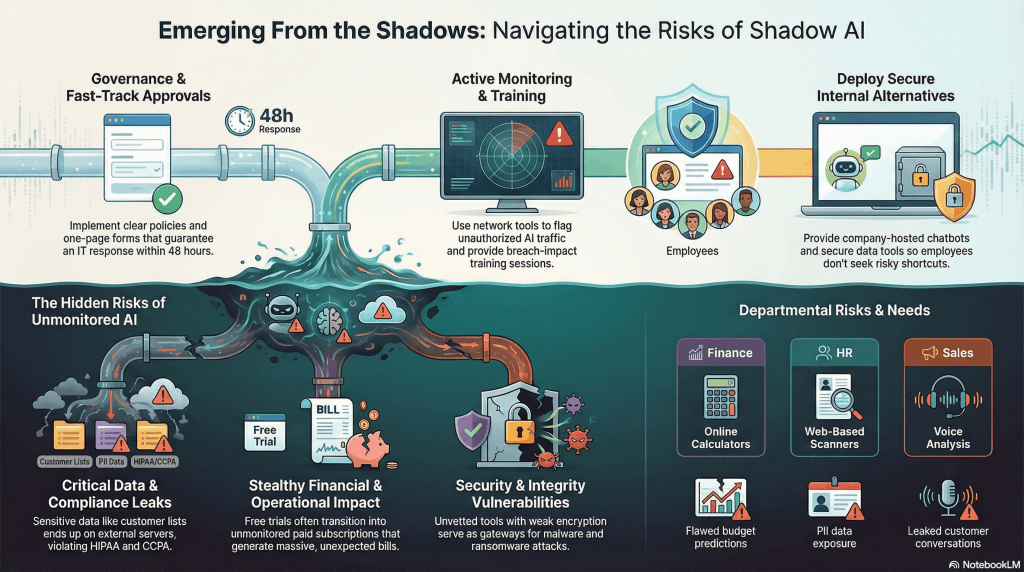

Recent industry reports indicate that a significant percentage of U.S. employees use unapproved AI tools at work because such tools are so easy to access. Finance teams, for instance, often run budget forecasts through online calculators, HR departments sort resumes with web-based scanners, and sales groups summarize customer calls with voice analysis services. Together, these create a web of unmonitored AI activity.

Managers often miss Shadow AI in its early stages because no clear alerts appear. The signs surface later as odd web traffic, unexpected cloud bills, or unfamiliar reports that prompt closer checks. Without centralized AI governance policies, organizations lose visibility into how sensitive data is processed, stored, and transmitted across external AI platforms.
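Spotting an unexpected bill usually comes down to a simple comparison against recent spending. The minimal Python sketch below assumes IT can export a team's monthly charges for AI tools as a list of dollar amounts; it flags any month whose spend exceeds twice the average of the previous three months. The function name `flag_spend_spikes`, the three-month window, and the factor of 2 are illustrative assumptions, not an industry standard.

```python
from statistics import mean


def flag_spend_spikes(monthly_spend, window=3, factor=2.0):
    """Flag months whose spend exceeds `factor` times the mean of the
    previous `window` months.

    Returns a list of (month_index, amount, baseline) tuples. A zero
    baseline (e.g. a team still on a free tier) means any new charge is flagged.
    """
    spikes = []
    for i in range(window, len(monthly_spend)):
        baseline = mean(monthly_spend[i - window:i])
        if monthly_spend[i] > factor * baseline:
            spikes.append((i, monthly_spend[i], baseline))
    return spikes


# Hypothetical monthly charges (USD) for AI tools on a team's expense card
spend = [40.0, 42.0, 38.0, 41.0, 120.0, 45.0]
for month, amount, baseline in flag_spend_spikes(spend):
    print(f"Month {month}: ${amount:.2f} vs. baseline ${baseline:.2f}")
```

For the sample data, only month 4 (counting from zero) is flagged. A trailing average adapts as legitimate usage grows, while the zero-baseline case catches the moment a free tier quietly turns into a paid plan.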

Shadow AI does not emerge suddenly. It develops gradually from genuine workplace frustrations, as employees facing pressing deadlines find official company systems too slow or too burdensome for their immediate needs.

When official AI tools sit stuck in lengthy approval processes or carry high costs, workers naturally turn to free or low-cost alternatives available directly in their web browsers.

Browser-based sign-ups that demand no credit card or approval, combined with mobile apps that work anywhere, make Shadow AI easy to adopt, even on personal devices. Internal delays such as forgotten IT forms, tight budgets, and pressure for quick results make things worse, pushing staff to share tool recommendations in chats and casual conversations.

Even AI providers contribute by marketing directly to end users with targeted ads and free trials that promise instant productivity gains, embedding Shadow AI deeper into daily operations.

Shadow AI poses a range of risks for companies across the globe. Data loss is the most immediate threat, as company emails, contracts, and client records can leak to outside servers. This significantly increases the risk of non-compliance with U.S. data protection laws such as HIPAA and CCPA, along with other industry-specific regulations.

Financial impacts accumulate quietly as well: once free tiers reach their limits, teams may unknowingly subscribe to paid plans that generate large bills months later. Inaccurate outputs from unreliable tools are another threat. Finance teams build reports on flawed predictions, marketing launches misguided campaigns, and clients leave once the errors become apparent, eroding the business’s reputation over time.

Sector-specific rules add further exposure: unmonitored AI use can compromise compliance with HIPAA in healthcare and SEC requirements in finance, potentially leading to penalties and reputational damage.

Security vulnerabilities pile up as well. Weak logins, missing encryption, and hidden malware can turn a single unsafe tool into a gateway for ransomware across the network.

Workflow disruptions are yet another risk. They arise when departments rely on mismatched AI platforms, forcing constant data reconciliation that slows projects down.

Companies can counter Shadow AI in several ways. A good starting point is a single company-wide email asking employees to list the AI tools they currently use. This reveals the size of the problem and should be followed by short, 15-minute training sessions that make the real-world impact of breaches clear and memorable.

Clear policies that ban AI use without IT approval, displayed on employee desktops and backed by simple one-page request forms with a 48-hour response time, further reduce the urge to bypass official channels. Providing good internal alternatives proves most effective: company-hosted chatbots for call summaries, secure resume scanners for HR, and reliable budget tools for finance, all available at no extra cost.

Network monitoring tools help as well. They can flag AI-related traffic, automatically block high-risk domains, scan devices for unauthorized applications, and analyze cloud logs for unusual patterns, giving IT weekly visibility into issues rather than discovering them after damage occurs.
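To make flagging and blocking AI traffic more concrete, here is a minimal Python sketch that scans web proxy log lines against a watchlist of AI domains. It assumes a simplified, hypothetical log format of `timestamp user host`; the domain names (under the reserved `.example` TLD) and the function names are placeholders, and commercial monitoring products work differently. The script only reports matches; actual blocking would be enforced at the proxy or DNS layer.

```python
from collections import Counter

# Hypothetical lists: in practice these would come from IT policy or a vendor feed.
AI_WATCHLIST = {"free-chatbot.example", "ai-writer.example", "voice-notes-ai.example"}
BLOCKLIST = {"free-chatbot.example"}  # high-risk subset that policy says to block


def host_matches(host: str, domains: set[str]) -> bool:
    """Return True if host is a listed domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def scan_proxy_log(lines):
    """Scan log lines assumed to look like 'timestamp user host'.

    Returns a Counter of (user, host) visits to watchlisted AI domains and a
    list of (user, host) requests that hit the block list.
    """
    flagged = Counter()
    blocked = []
    for line in lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed or differently formatted lines
        _timestamp, user, host = parts
        if host_matches(host, BLOCKLIST):
            blocked.append((user, host))  # a proxy would deny this request
        elif host_matches(host, AI_WATCHLIST):
            flagged[(user, host)] += 1  # allowed for now, but reported to IT
    return flagged, blocked


# Example run on made-up log entries
log = [
    "2026-02-10T09:15 alice app.ai-writer.example",
    "2026-02-10T09:20 alice app.ai-writer.example",
    "2026-02-10T10:02 bob free-chatbot.example",
    "2026-02-10T10:05 carol intranet.corp.example",
]
flagged, blocked = scan_proxy_log(log)
print(flagged)
print(blocked)
```

Matching subdomains (such as `app.ai-writer.example`) keeps the watchlist short, while requiring a leading dot avoids false positives like `notai-writer.example`. Counting visits per user helps IT start a conversation about approved alternatives rather than simply cutting access.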

Monthly team check-ins reveal delays, letting IT speed up approvals and reward staff who report hidden tools. Agreements with trusted AI vendors that use U.S. data centers and commit to strict contractual terms can further help keep data secure.

Conclusion

All in all, Shadow AI puts companies at serious risk of data leaks, rising costs, regulatory violations, and lost trust. Smart leaders can curb it by listening to their teams, approving safe tools promptly, monitoring closely, and providing secure options so staff don’t need risky shortcuts.

Only safe AI can help your business grow, and Techjockey can help you achieve just that with its dynamic range of cybersecurity software offerings.

So, what are you waiting for? Give our team a call today and save your company from the evil shadows of Shadow AI.

Frequently Asked Questions

What risks does Shadow AI pose?

Shadow AI can cause data leaks, security breaches, compliance violations, hidden costs, and inaccurate business decisions.

How can organizations manage Shadow AI?

Organizations can set clear AI policies, offer approved secure tools, train employees, and use monitoring software.

Can Shadow AI damage a company’s reputation?

Yes, inaccurate AI outputs or leaked data can harm customer trust and damage a business’s credibility.

How do monitoring tools help control Shadow AI?

They detect unauthorized AI usage, block risky domains, and alert IT teams before major security issues arise.

What are the common signs of Shadow AI?

Unusual web traffic, unknown reports, surprise cloud charges, and new tool usage patterns often reveal hidden AI usage.
