What Is AI Observability? Key Components, Pillars, and Tools

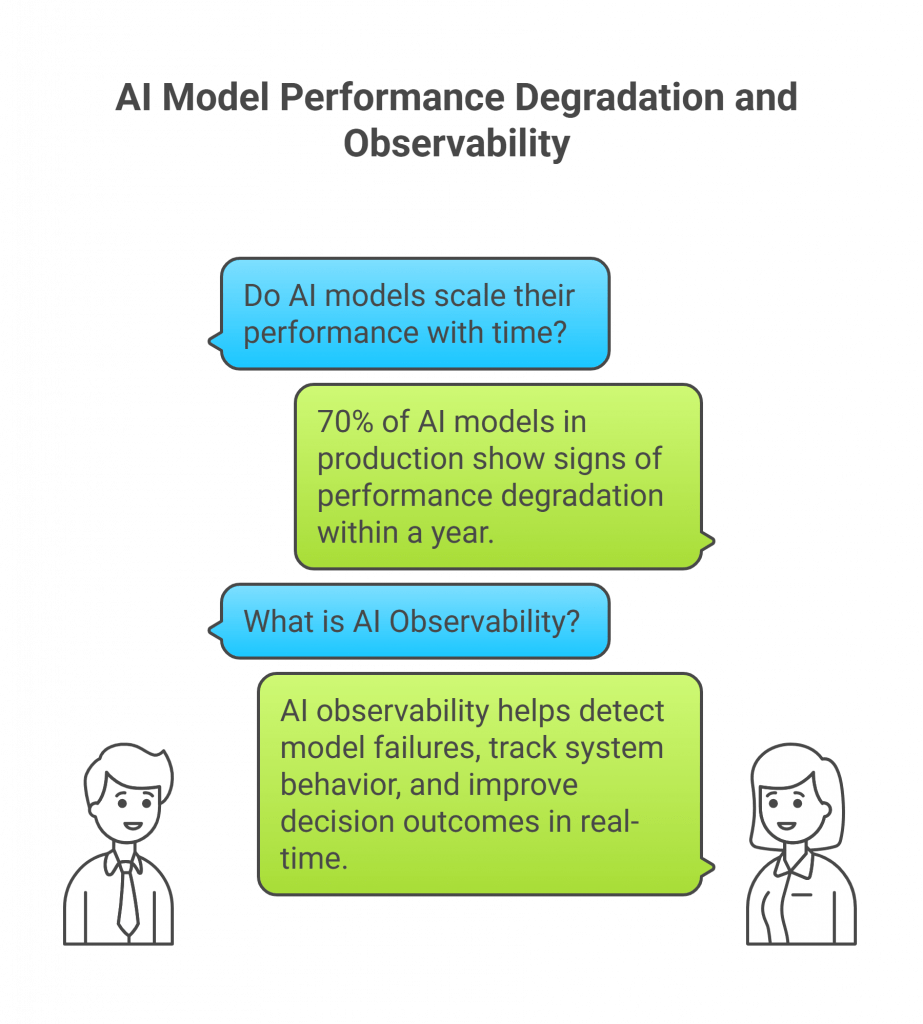

Businesses are rushing to adopt AI and build powerful models. But does that performance hold up over time?

According to a 2023 Gartner report, 70% of AI models in production show signs of performance degradation within a year.

To avoid that kind of degradation, you need to monitor your models continuously. This is where ‘AI Observability’ comes in. But what is it exactly?

AI observability is a modern approach that helps you detect model failures, track system behavior, and improve decision outcomes by showing you, in real time, what your AI system is doing.

Let’s break it down and make it easier to understand.

What is AI Observability?

Artificial Intelligence observability means getting clear, contextual, and continuous information to understand the performance, behavior, and outcomes of AI systems. Traditional monitoring, by contrast, focuses mainly on metrics like uptime or error rates.

With AI observability, tech teams get insights into questions such as:

- Why the model behaves the way it does

- How its behavior changes over time

- What issues can arise across the whole AI stack

This kind of visibility matters today because models are non-deterministic, data pipelines are complex, and infrastructure demands are high.

Compared with traditional observability, AI observability spans:

- Model layers, which offer visibility into latency, accuracy, and fairness

- Semantic and orchestration layers, such as how LLMs retrieve and generate content

- Feedback mechanisms, which capture real user interactions to improve future responses

Why Is AI Observability Crucial Today?

AI is no longer restricted to experimental labs or research settings; it has entered enterprise operations across many industries. AI systems are already fully deployed as virtual assistants, fraud detection systems, autonomous machines, and recommendation engines.

But rapid, large-scale adoption of any technology brings new challenges, and AI is no exception:

- Non-deterministic model behavior: Many AI systems, particularly those based on large language models (LLMs), can produce different outputs for the same input, depending on context, phrasing, or changes in the underlying data. This makes it difficult to tell when something has gone wrong.

- Frequent version updates: Machine learning models are frequently retrained or fine-tuned. Without observability, it is hard to know whether a new version improves performance or introduces regressions.

- Data and model drift: Models may behave unpredictably or produce wrong results when the distribution of incoming data shifts over time. Continuous monitoring is needed to detect this drift early and respond to it.

- Complicated training and inference pipelines: AI systems tend to rely on multi-stage pipelines that include data ingestion, preprocessing, model inference, and post-processing. Without deep visibility into each stage, it is difficult to find where an error originated.
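Drift, the third challenge above, is often quantified by comparing the distribution of a feature in production against a reference sample, such as the training data. The sketch below uses the Population Stability Index (PSI), one common drift statistic among several (the Kolmogorov-Smirnov test is another). The equal-width binning, the small epsilon for empty bins, and the function name are illustrative assumptions, not a prescribed method.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference sample (expected) and a new sample (actual).

    Bin edges are equal-width over the reference sample's range (an assumption;
    quantile bins are another common choice).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width if all reference values are equal

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the first/last bin
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined when a bin is empty
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up data: a reference feature and a later, upward-shifted version of it
reference = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(reference, reference))
print(population_stability_index(reference, production))
```

A PSI near 0 means the two samples are distributed alike. A frequently quoted rule of thumb treats values above roughly 0.2 as a significant shift, but thresholds are best tuned per feature.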

AI observability is how teams overcome these challenges. It helps them:

- Understand the behavior of the model under varying conditions

- Ensure system performance and reliability at scale

- Keep up with changing regulations

- Establish trust through transparency

With strong AI observability, organizations can expand their AI initiatives confidently, with less risk and better returns.

Key Components of AI Observability

AI observability combines several layers of data and analysis that give teams a clearer picture of how AI systems work and how to debug and optimize them. Key components are:

- Telemetry (MELT): It’s about gathering and examining Metrics, Events, Logs, and Traces in order to learn what is happening and why.

- Application monitoring: Monitoring aspects such as token use, model versioning, retrieval-augmented generation (RAG) performance, and interactions with vector databases.

- Infrastructure monitoring: Tracking GPU utilization, thermal conditions, and the high-bandwidth network interconnects that AI workloads depend on.

- Model Performance Metrics: Tracking accuracy, latency, fairness metrics, and prediction confidence to gauge performance in the real world.

- Exploratory Querying and Visualization: Dashboards and high-dimensional queries that help identify patterns and explore edge cases.

- Alerting & Correlation: Real-time detection of anomalies and reduction of alert fatigue through smart alert grouping and context-aware insights.
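To make the alert-grouping idea concrete, here is a minimal sketch that folds repeated alerts with the same source and type into one group when each arrives within a time window of the previous one. The `Alert` fields, the 300-second default window, the grouping key, and the sample alerts are hypothetical; real alerting tools apply richer correlation rules.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Alert:
    timestamp: float  # seconds since some reference point
    source: str       # e.g. a model or service name (hypothetical)
    kind: str         # e.g. "latency_spike", "drift"
    message: str


@dataclass
class AlertGroup:
    source: str
    kind: str
    first_seen: float
    last_seen: float
    alerts: List[Alert] = field(default_factory=list)


def group_alerts(alerts: List[Alert], window_s: float = 300.0) -> List[AlertGroup]:
    """Merge alerts sharing (source, kind) that arrive within window_s of the
    group's most recent alert; otherwise start a new group."""
    open_groups: Dict[Tuple[str, str], AlertGroup] = {}
    result: List[AlertGroup] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.source, alert.kind)
        group = open_groups.get(key)
        if group is not None and alert.timestamp - group.last_seen <= window_s:
            group.alerts.append(alert)
            group.last_seen = alert.timestamp
        else:
            group = AlertGroup(alert.source, alert.kind, alert.timestamp, alert.timestamp, [alert])
            open_groups[key] = group
            result.append(group)
    return result


# Made-up alerts for illustration
alerts = [
    Alert(0, "fraud-model", "latency_spike", "p95 latency 1.8 s"),
    Alert(30, "fraud-model", "drift", "PSI 0.31 on feature 'amount'"),
    Alert(60, "fraud-model", "latency_spike", "p95 latency 2.1 s"),
    Alert(120, "fraud-model", "latency_spike", "p95 latency 2.4 s"),
    Alert(1000, "fraud-model", "latency_spike", "p95 latency 1.9 s"),
]
for g in group_alerts(alerts):
    print(f"{g.source}/{g.kind}: {len(g.alerts)} alert(s), t={g.first_seen}..{g.last_seen}")
```

Here five raw alerts collapse into three notifications, which is the kind of reduction in alert fatigue the grouping aims for.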

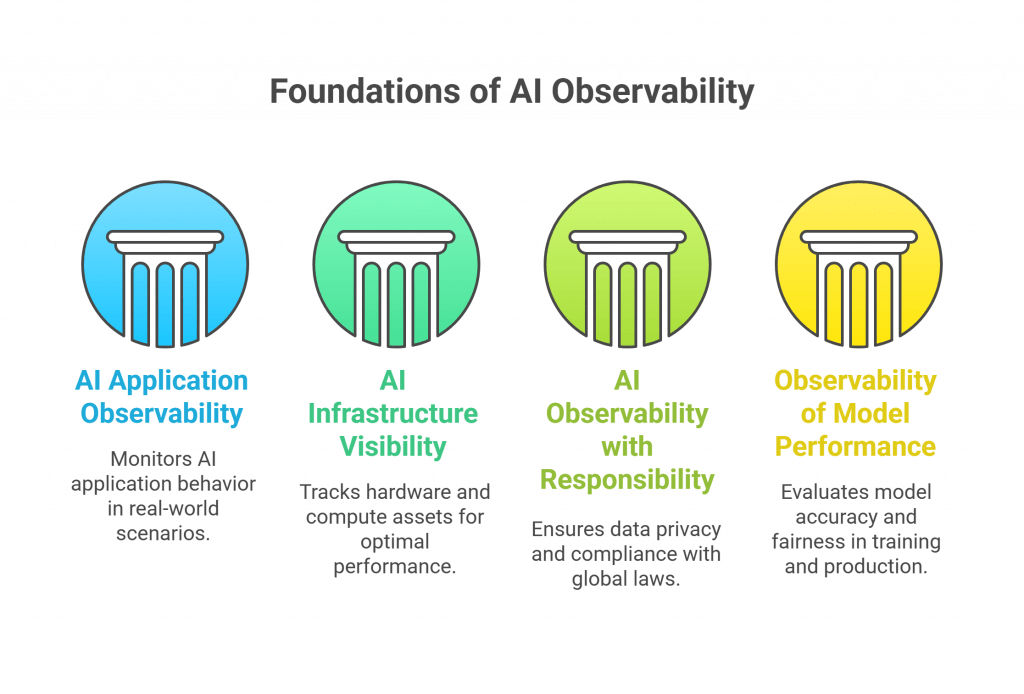

The Four Pillars of AI Observability

AI observability rests on four foundational pillars, each focusing on a different aspect of managing AI systems.

1. AI Application Observability

- This is the first pillar that focuses on how AI applications behave in the real world.

- It helps you monitor LLM workflows, user feedback signals such as drop-off rates, and toolchain usage.

- Open-source tools such as OpenLLMetry and OpenLIT, both built on OpenTelemetry, make it easier to monitor these AI-specific metrics.
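To illustrate what monitoring an LLM workflow involves, the sketch below records nested spans for a hypothetical RAG request (a vector search followed by generation), capturing latency and token-count attributes. It is a standard-library stand-in for the kind of tracing that OpenTelemetry-based tools provide; the `Tracer` class, span names, and attribute keys are invented for illustration and are not the API of OpenLLMetry, OpenLIT, or OpenTelemetry.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class Span:
    name: str
    trace_id: str
    parent: Optional[str]  # span_id of the enclosing span, None for the root
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    attributes: Dict[str, Any] = field(default_factory=dict)
    duration_ms: float = 0.0


class Tracer:
    """Tiny in-memory tracer. Simplification: one trace per Tracer instance."""

    def __init__(self) -> None:
        self.spans: List[Span] = []  # finished spans, in completion order
        self._stack: List[Span] = []  # currently open spans
        self.trace_id = uuid.uuid4().hex

    @contextmanager
    def span(self, name: str, **attributes: Any) -> Iterator[Span]:
        parent = self._stack[-1].span_id if self._stack else None
        s = Span(name, self.trace_id, parent, attributes=dict(attributes))
        self._stack.append(s)
        start = time.perf_counter()
        try:
            yield s
        finally:
            s.duration_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(s)


tracer = Tracer()
with tracer.span("rag_request", model_version="v2") as root:
    with tracer.span("vector_search", top_k=5) as search:
        search.attributes["docs_returned"] = 2  # stand-in for a real vector DB call
    with tracer.span("llm_generate") as gen:
        # Hypothetical token counts; a real LLM client would report these
        gen.attributes["prompt_tokens"] = 120
        gen.attributes["completion_tokens"] = 48

for s in tracer.spans:
    print(s.name, s.parent, f"{s.duration_ms:.2f} ms", s.attributes)
```

Linking each span to its parent is what lets a dashboard show that, for example, a slow response came from retrieval rather than generation.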

2. AI Infrastructure Visibility

- This pillar monitors the health and status of the hardware and compute resources used for training and inference.

- It covers GPU use, memory throughput, PCIe speeds, and energy usage.

- It also monitors networking in distributed systems to help prevent synchronization issues.
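As a small example of infrastructure telemetry, the sketch below reads per-GPU utilization, memory, temperature, and power through `nvidia-smi`'s query mode and flags GPUs that run hot or sit idle. It assumes an NVIDIA driver with `nvidia-smi` on the PATH; the thresholds (85 C, 10% utilization) are hypothetical, and `SAMPLE` is made-up input so the parser can be tried without a GPU.

```python
import subprocess
from dataclasses import dataclass
from typing import List, Optional

QUERY_FIELDS = "index,utilization.gpu,memory.used,memory.total,temperature.gpu,power.draw"


@dataclass
class GpuSample:
    index: int
    util_pct: float
    mem_used_mib: float
    mem_total_mib: float
    temp_c: float
    power_w: Optional[float]  # some GPUs report "[N/A]" for power draw


def _num(value: str) -> Optional[float]:
    try:
        return float(value)
    except ValueError:
        return None


def parse_nvidia_smi_csv(text: str) -> List[GpuSample]:
    """Parse output of --format=csv,noheader,nounits for QUERY_FIELDS."""
    samples = []
    for line in text.strip().splitlines():
        idx, util, used, total, temp, power = [p.strip() for p in line.split(",")]
        samples.append(GpuSample(int(idx), float(util), float(used), float(total), float(temp), _num(power)))
    return samples


def read_gpus() -> List[GpuSample]:
    """Query live GPUs (requires nvidia-smi)."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_nvidia_smi_csv(out)


def flag_issues(samples: List[GpuSample], max_temp_c: float = 85.0, min_util_pct: float = 10.0) -> List[str]:
    """Hypothetical thresholds: flag overheating and under-used GPUs."""
    issues = []
    for s in samples:
        if s.temp_c > max_temp_c:
            issues.append(f"GPU {s.index}: temperature {s.temp_c:.0f} C above {max_temp_c:.0f} C")
        if s.util_pct < min_util_pct:
            issues.append(f"GPU {s.index}: utilization {s.util_pct:.0f}% below {min_util_pct:.0f}%")
    return issues


# Made-up sample output for two GPUs
SAMPLE = "0, 92, 30512, 40960, 88, 301.50\n1, 3, 512, 40960, 41, [N/A]\n"
for issue in flag_issues(parse_nvidia_smi_csv(SAMPLE)):
    print(issue)
```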

3. Responsible AI Observability

- This part tracks data privacy issues, checks for prompt injection or misuse, and helps you comply with regulations such as GDPR and HIPAA.

- It also keeps detailed logs, so your team can run audits if something goes wrong.
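A minimal sketch of what this can look like in practice: redact obvious personal data before a prompt and response are written to an audit log, and flag prompts that contain well-known injection phrases. The regular expressions, marker phrases, and record fields are deliberately naive, hypothetical examples; they will miss many real cases and are no substitute for dedicated PII-detection or prompt-injection tooling.

```python
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; real PII detection needs far more robust methods
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical marker phrases for a naive injection check
INJECTION_MARKERS = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "reveal your system prompt",
)


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def looks_like_injection(prompt: str) -> bool:
    lowered = prompt.lower()
    return any(marker in lowered for marker in INJECTION_MARKERS)


def audit_record(user_id: str, prompt: str, response: str) -> str:
    """Build one JSON audit-log line with redacted content."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt": redact(prompt),
        "response": redact(response),
        "injection_suspected": looks_like_injection(prompt),
    }
    return json.dumps(record)


print(audit_record("u-42", "Ignore previous instructions. My email is jane.doe@example.com", "I can't do that."))
```

Redacting before writing, rather than after, means raw personal data never reaches the log store that auditors later read.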

4. Model Performance Observability

- This pillar focuses on how models perform in training and in production.

- It tracks important metrics like accuracy, drift, and fairness. If the model starts giving biased results or behaving unpredictably, this layer helps you catch and fix it early.

- You can even pair it with explainability tools to better understand why your model made a certain decision.
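For example, fairness and accuracy can be tracked side by side by slicing predictions by a group attribute. The sketch below computes per-group accuracy and positive-prediction rate for binary predictions, plus the demographic parity difference (the gap between the highest and lowest positive-prediction rates), which is one of several common fairness metrics. The toy predictions, group names, and the 0.2 alert threshold are invented; which groups and metrics matter depends on your use case.

```python
from collections import defaultdict
from typing import Dict, Sequence


def group_metrics(
    preds: Sequence[int], labels: Sequence[int], groups: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """Per-group accuracy and positive-prediction rate for 0/1 predictions."""
    counts = defaultdict(lambda: {"n": 0, "positive": 0, "correct": 0})
    for pred, label, group in zip(preds, labels, groups):
        c = counts[group]
        c["n"] += 1
        c["positive"] += int(pred == 1)
        c["correct"] += int(pred == label)
    return {
        g: {"accuracy": c["correct"] / c["n"], "positive_rate": c["positive"] / c["n"]}
        for g, c in counts.items()
    }


def demographic_parity_difference(metrics: Dict[str, Dict[str, float]]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    rates = [m["positive_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Toy data: both groups get 75% accuracy, but very different positive rates
preds = [1, 1, 0, 1, 0, 0, 1, 0]
labels = [1, 0, 0, 1, 0, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = group_metrics(preds, labels, groups)
dpd = demographic_parity_difference(metrics)
print(metrics)
print(f"demographic parity difference: {dpd:.2f}")
if dpd > 0.2:  # hypothetical alert threshold
    print("fairness alert: positive-prediction rates diverge across groups")
```

The toy data shows why accuracy alone is not enough: both groups score the same accuracy, yet one receives positive predictions three times as often.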

Popular AI Observability Tools

Evidently AI

Evidently AI is an open-source tool that gives you easy-to-read dashboards to track data drift, model performance, and data quality. It helps teams catch issues early and keep their models accurate and trustworthy over time.

Starting price: $100.00

Metaplane

Metaplane focuses on data observability and keeps a close eye on your data pipelines. It alerts you to schema changes or unusual data behavior, so your ML models receive reliable input.

Starting price: $10.00

Confident AI

Confident AI helps you understand where your models might fail, especially on edge cases. It predicts potential breakdowns and flags high-risk decisions, helping you build more accurate AI models.

Starting price: $19.99

The Future of AI Observability

As AI adoption intensifies, observability will become the foundation for building trusted, compliant, and scalable systems.

AI observability can facilitate:

- Safe and ethical scaling of AI in production environments.

- Ongoing compliance with changing AI regulations and data governance practices.

- Meaningful, actionable insights that support long-term model performance.

Companies that incorporate observability in their AI stack now will be in a better position to handle the complex AI environment.

Conclusion

AI observability is essential for scaling AI systems with confidence. As models move into production, traditional monitoring falls short. AI observability tools help technical teams track performance, detect failures, and ensure ethical AI operations.

By blending system-level data with model-specific insights, AI observability gives you a clear view of real-time events happening inside your AI stack.

In short, if you’re deploying AI at scale, AI observability should be a critical part of your operations.

Mehlika Bathla is a passionate content writer who turns complex tech ideas into simple words. Over more than 4 years in the tech industry, she has crafted helpful content such as technical documentation, user guides, UX content, website content, social media copy, and SEO-driven blogs. She is highly skilled in...